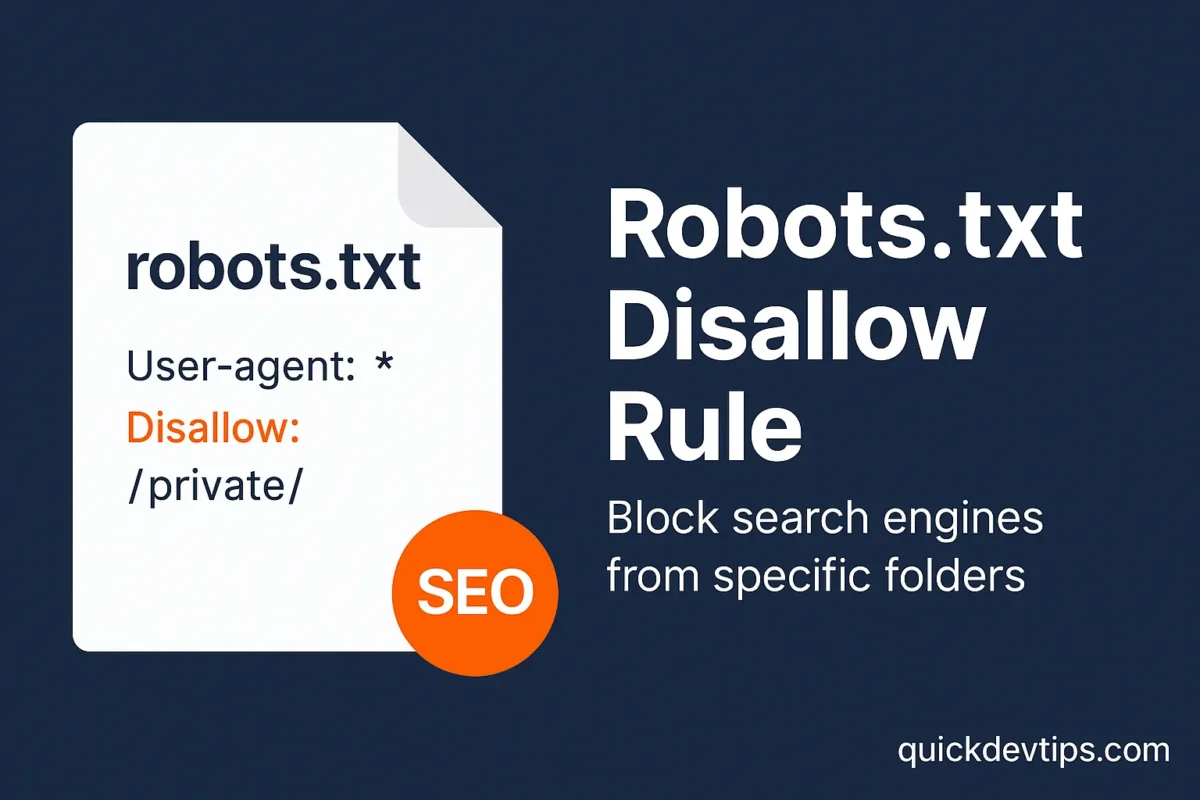

Robots.txt Disallow Rule — Block Search Engines from Specific Folders

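Introduction

The robots.txt Disallow rule tells search engine crawlers which parts of your website they should not crawl. It is a simple, widely supported SEO control for keeping crawlers out of specific folders or pages. Keep in mind that it is a request rather than access control: reputable crawlers honor it, but it does not password-protect the blocked content.

Robots.txt Disallow Rule Example

How It Works

The robots.txt file sits at the root of your website (for example, https://www.example.com/robots.txt). The Disallow directive …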
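To see how a crawler might evaluate Disallow rules, here is a minimal sketch using Python's standard-library `urllib.robotparser` module. The rules are parsed from a string instead of being fetched from a live site. The folder names `/private/` and `/tmp/`, the domain `example.com`, and the helper names `build_parser` and `is_allowed` are hypothetical placeholders for illustration, not part of the original post.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: ask every crawler (User-agent: *)
# to stay out of two example folders.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /tmp/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True if the parsed rules permit user_agent to crawl url."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    for path in ("/", "/blog/post.html", "/private/report.pdf", "/tmp/cache.html"):
        url = "https://example.com" + path
        verdict = "allowed" if is_allowed(rp, "Googlebot", url) else "blocked"
        print(path, "->", verdict)
```

Disallow values are matched as path prefixes, so `Disallow: /private/` blocks every URL inside that folder but leaves a page such as `/private-notes.html` crawlable; writing `Disallow: /private` without the trailing slash would block both. The standard-library parser only does this simple prefix matching, so it may not reflect extra pattern syntax that individual search engines support.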