Introduction

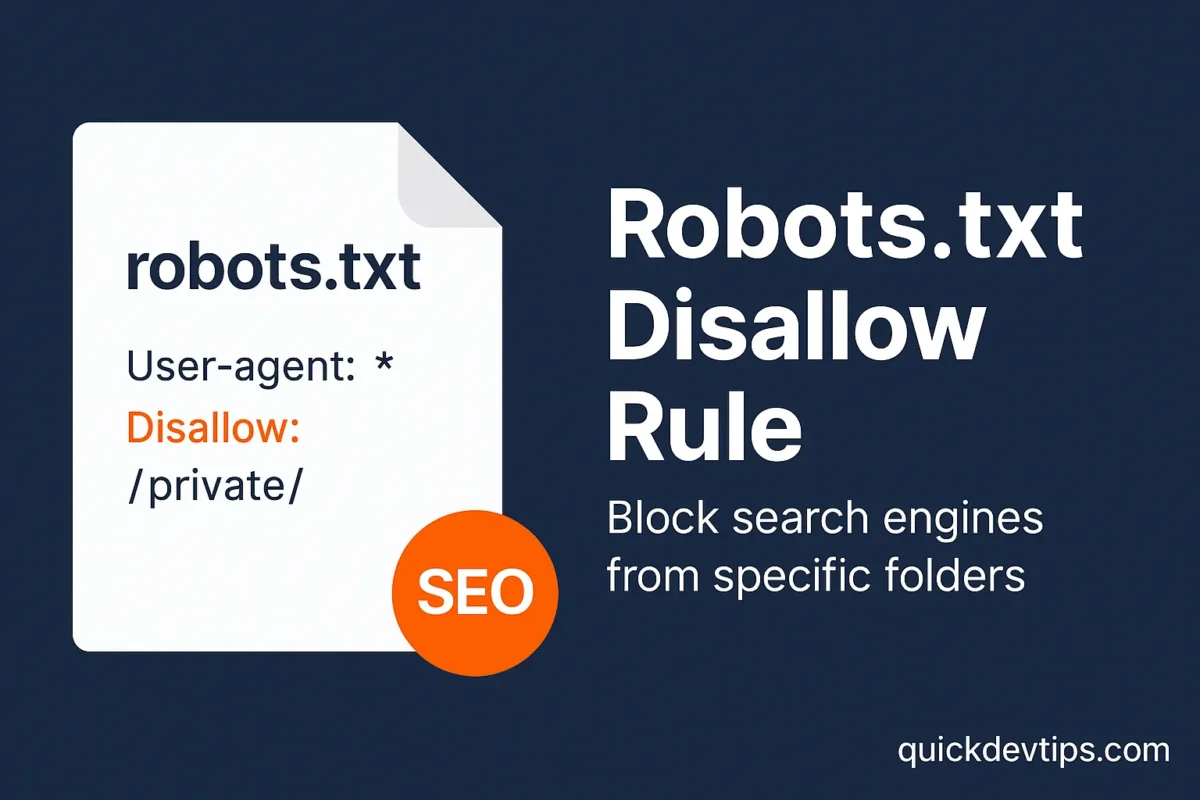

The robots.txt Disallow rule tells search engine crawlers which parts of your website they should not crawl. It is a simple but powerful SEO control for keeping compliant bots away from specific folders or pages.

Robots.txt Disallow Rule Example

User-agent: *
Disallow: /private/
Disallow: /tmp/
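To see how a crawler reads the example above, the sketch below feeds the same rules to Python's standard-library urllib.robotparser and asks whether a few sample URLs may be fetched. The example.com URLs and the Googlebot user-agent string are illustrative assumptions. urllib.robotparser follows the original robots.txt conventions (the first matching rule wins, and there is no support for * or $ wildcards inside paths), so it approximates, rather than reproduces, how Google evaluates the file.

```python
from urllib.robotparser import RobotFileParser

# The example rules from above, written as one contiguous group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /tmp/
"""

parser = RobotFileParser()
# parse() accepts an iterable of lines, so no network fetch is needed.
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical URLs on a hypothetical host, mapped to the expected verdict.
checks = {
    "https://example.com/private/report.html": False,  # path starts with /private/
    "https://example.com/tmp/cache.dat": False,        # path starts with /tmp/
    "https://example.com/blog/post.html": True,        # not covered by any rule
    "https://example.com/private": True,               # no trailing slash, so no prefix match
}

for url, expected in checks.items():
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{url}: {'allowed' if allowed else 'blocked'}")
    assert allowed == expected
```

Keep each group contiguous. urllib.robotparser treats a blank line as the end of a record, so a blank line between User-agent: * and its Disallow lines makes it discard those rules and allow every URL.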

How It Works

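The robots.txt file must sit at the root of your site (for example, https://example.com/robots.txt), and its rules apply only to that host and protocol. Each Disallow line gives a path prefix: a crawler that matches the group's User-agent line (here *, meaning every crawler) will not fetch any URL whose path starts with that value. This lets you steer bots away from irrelevant content, but the rules are only requests that well-behaved crawlers choose to honor, and the file itself is publicly readable, so never rely on it to protect sensitive pages.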
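The location and prefix-matching rules described above can be sketched in a few lines of Python. This is a simplified model, not a full parser: the helper names robots_url_for and is_disallowed are hypothetical, and the sketch ignores Allow lines, the * and $ wildcards, and per-crawler groups that real parsers handle.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url_for(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url.

    The file is looked up per scheme + host (+ port), always at /robots.txt.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def is_disallowed(page_url: str, disallow_paths: list[str]) -> bool:
    """Simplified check: blocked if the path (plus query) starts with any Disallow value."""
    parts = urlsplit(page_url)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    # An empty "Disallow:" value blocks nothing, so empty entries are skipped.
    return any(rule and target.startswith(rule) for rule in disallow_paths)


rules = ["/private/", "/tmp/"]
print(robots_url_for("https://shop.example.com/private/a.html"))  # https://shop.example.com/robots.txt
print(is_disallowed("https://example.com/private/a.html", rules))  # True
print(is_disallowed("https://example.com/about", rules))  # False
```

Because matching is by prefix, Disallow: /private/ blocks /private/a.html but not /private itself. Writing Disallow: /private would block both, but also any other path that starts with /private, such as /private-notes.html.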

Why Use This?

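Use the Disallow rule to keep crawlers out of admin areas, duplicate content, or temporary files, so they spend their crawl time on your important pages. Note that Disallow controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it. To keep a page out of results, use a noindex directive on a page that crawlers are allowed to fetch.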

Common Mistake

Blocking critical resources such as /css/ or /js/ can stop Google from rendering your pages the way visitors see them. Never block assets a page needs in order to display.
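One way to catch this mistake before deploying is to run the stylesheet and script URLs your pages load through a robots.txt parser. The sketch below does that with Python's standard-library urllib.robotparser. The blocked_assets helper, the Disallow: /js/ rule, and the asset URLs are hypothetical, and the parser only approximates Google's matching (no wildcard support, first matching rule wins).

```python
from urllib.robotparser import RobotFileParser


def blocked_assets(robots_txt: str, asset_urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the asset URLs that robots_txt would stop `agent` from fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in asset_urls if not parser.can_fetch(agent, url)]


# A hypothetical robots.txt containing the mistake described above: /js/ is disallowed.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /js/
"""

# Hypothetical stylesheet and script URLs that a page needs in order to render.
ASSETS = [
    "https://example.com/css/site.css",
    "https://example.com/js/app.js",
]

problems = blocked_assets(ROBOTS_TXT, ASSETS)
for url in problems:
    print(f"Blocked rendering resource: {url}")
```

Running a check like this in a deploy pipeline flags the blocked script before Google ever tries to render the page.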

Pro Tip

After changing robots.txt, test a few representative URLs with the URL Inspection tool in Google Search Console to confirm that only the intended paths are blocked.